- Make sure your pipeline is solid end to end
- Start with a reasonable objective
- Add common-sense features in a simple way
- Make sure that your pipeline stays solid

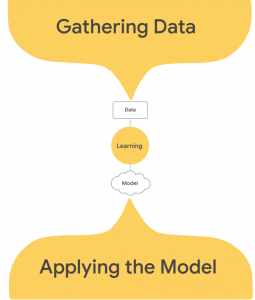

ONE: Make sure your pipeline is solid end to end

TWO: Start with a reasonable objective

If you know me at all, you know that this is something I find essential to get right, and as early as possible. The objective is the thing that your data science is designed to optimize. Want to increase personalization? Then the objective should be to show the right product to the right person at the right time. But early on, when few data are available, popularity might be a good proxy for that objective, and one that’s easy to build. Then, as more data become available, you can implement more complex models. The objective never changes, but the model can grow in complexity and serve that objective of personalization better. Without the right objective, your data scientists will be working on the wrong problem.
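The idea of keeping the objective fixed while the model grows can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not a prescribed method: it assumes interaction logs arrive as (user, item) pairs and uses hit rate at k on held-out interactions as an offline stand-in for "the right product to the right person." The function names (`popularity_model`, `cooccurrence_model`, `hit_rate_at_k`) and the toy data are hypothetical. The popularity baseline plays the role of the easy early-stage proxy; item co-occurrence is just one example of a more complex model you might swap in later.

```python
from collections import Counter, defaultdict
from typing import Callable, Dict, List, Set, Tuple

# Hypothetical data shape: each interaction is a (user_id, item_id) pair.
Interaction = Tuple[str, str]
# A model is anything that maps (user, k) to a ranked list of up to k item ids.
Recommender = Callable[[str, int], List[str]]


def history(train: List[Interaction]) -> Dict[str, Set[str]]:
    """Collect the set of items each user has already interacted with."""
    seen: Dict[str, Set[str]] = defaultdict(set)
    for user, item in train:
        seen[user].add(item)
    return seen


def popularity_model(train: List[Interaction]) -> Recommender:
    """Early-stage baseline: rank unseen items by global interaction count."""
    counts = Counter(item for _, item in train)
    seen = history(train)

    def recommend(user: str, k: int) -> List[str]:
        already = seen.get(user, set())
        ranked = [item for item, _ in counts.most_common() if item not in already]
        return ranked[:k]

    return recommend


def cooccurrence_model(train: List[Interaction]) -> Recommender:
    """A more complex model: score unseen items by how often they co-occur
    with the user's history, breaking ties by popularity."""
    popularity = Counter(item for _, item in train)
    seen = history(train)
    co: Dict[str, Counter] = defaultdict(Counter)
    for items in seen.values():  # quadratic per user; fine for a sketch
        for a in items:
            for b in items:
                if a != b:
                    co[a][b] += 1

    def recommend(user: str, k: int) -> List[str]:
        already = seen.get(user, set())
        scores: Counter = Counter()
        for item in already:
            scores.update(co.get(item, Counter()))
        candidates = [item for item in popularity if item not in already]
        candidates.sort(key=lambda i: (scores[i], popularity[i]), reverse=True)
        return candidates[:k]

    return recommend


def hit_rate_at_k(model: Recommender, test: List[Interaction], k: int) -> float:
    """The fixed offline objective: share of held-out (user, item) pairs whose
    item shows up in that user's top-k recommendations."""
    if not test:
        return 0.0
    hits = sum(1 for user, item in test if item in model(user, k))
    return hits / len(test)


# Made-up toy data, for illustration only.
train = [
    ("alice", "tent"), ("alice", "stove"),
    ("bob", "tent"), ("bob", "stove"), ("bob", "lantern"),
    ("carol", "book"),
    ("dave", "book"), ("dave", "lamp"),
    ("erin", "book"), ("erin", "lamp"),
    ("frank", "tent"),
]
test = [("carol", "lamp"), ("frank", "stove")]

# Same objective, increasingly complex models.
for name, build in [("popularity", popularity_model), ("co-occurrence", cooccurrence_model)]:
    print(name, hit_rate_at_k(build(train), test, k=1))
```

On this made-up data the loop prints `popularity 0.0` and then `co-occurrence 1.0`. The numbers matter less than the design: `hit_rate_at_k` stays untouched while the model behind the `Recommender` interface changes, so every new model is judged against the same objective. A real evaluation would also need a time-based train/test split and far more data.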

THREE: Add common-sense features in a simple way

FOUR: Make sure that your pipeline stays solid

We’re experts in these methodologies. Don’t hesitate to contact us if you would like to learn more about how we’ve helped companies big and small set up their data science initiatives for success.

Links:

- The PDF can be found here: http://martin.zinkevich.org/rules_of_ml/rules_of_ml.pdf
- The (newer) web page is here: https://developers.google.com/machine-learning/guides/rules-of-ml/

Zank Bennett is CEO of Bennett Data Science, a group that works with companies from early-stage startups to the Fortune 500. BDS specializes in working with large volumes of data to solve complex business problems, finding novel ways for companies to grow their products and revenue using data, and maximizing the effectiveness of existing data science personnel. https://bennettdatascience.com