Are we losing our creativity? Our individuality?

As I type this, words are being suggested for me. All I need to do is hit TAB and the predicted next word is instantly typed for me. To be transparent, I sometimes feel that the suggested word or phrase is a refreshingly different way to say what I wanted to convey. So I hit TAB and there it is. Each time I do this I feel grateful for the help and the time saved. But what is happening to my intent? Is it always preserved? For myself, the answer is clear: sometimes.

How much intent is changed when that “sometimes” is multiplied across all the people using such a service every day, around the world? And what’s the consequence of that change in intent? It’s not zero; it can’t be. Is it negligible?

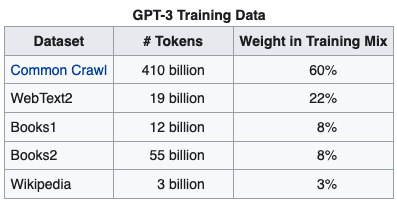

The language models that predict what we’ll type next were trained on huge collections of text that are generally publicly available for such training. Where do we find a huge collection of text like this? The answer for one of the biggest language models, GPT-3, can be found on Wikipedia.

Common Crawl accounts for 60% of the model training data. Common Crawl is a nonprofit 501(c)(3) organization that crawls the web and freely provides its archives and datasets to the public. Its web archive consists of petabytes of data collected since 2011. It completes crawls generally every month.

That’s where most of the auto-complete power comes from: text that’s been written on public (English-language) websites everywhere.

But what sort of fundamental transformations occur based on wide adoption of this and other generative A.I.? For example, what happens when teachers use auto-complete, chatbots, or personalized learning systems to help in the classroom? Would this allow for larger classes with a perceived higher level of personalization? This article touches upon this and asks the question: How Should We Approach the Ethical Considerations of AI in K-12 Education?

And what happens when A.I. is used for a single purpose for a long time? Imagine that everyone writing articles for websites uses autocomplete. After a decade of doing so, most of the articles considered for training the predictive algorithm would themselves have been shaped by its suggestions! We see this kind of feedback loop all the time in practice. Wouldn’t we then see a homogenization of language?

We’re relying more and more on A.I. to automate repetitive tasks. As this trend expands, it’s essential to ask a few fundamental questions:

- What data were used to train the A.I. making the decision?

- Were the data biased for or against a certain population, marginalized or not?

- What are the long-term consequences of using A.I.-generated suggestions or automation?

In this month’s Tech Tuesday, the articles below dive into some interesting developments in A.I.-driven automation, from robots that comprise an entire kitchen to predicting insurance costs based on perceived driver safety.

I hope you have a wonderful month!

Best,

-Zank

Of Interest

Cala Raises €1M for its Vegetarian Pasta Making Robot

Would you eat food prepared by a robot? Would it taste as good? Do fuzzy things like human care, intent, or touch matter in how we interpret our satisfaction with a meal? Would you feel less obliged to leave a tip? If the food tastes bad, what sort of system is in place to handle complaints? Wow, this one raises a lot of questions. Take a look at this incredible new trend in food preparation.

What Tesla’s Data Crash Revealed

Dutch investigators have been able to reverse-engineer Tesla’s data logs following a crash, and found that the information stored by a car is greater than what’s typically handed over by the company, according to The Verge. The discovery could have broad implications in the United States, where Tesla is being probed by federal traffic and highway investigators. Read more here.

Tesla Launches its Insurance Using ‘Real-Time Driving Behavior’

Drivers who are less likely to be in a car accident deserve lower insurance rates, right? But how can we possibly know how safe a driver is? Enter sensors, the kind found all over self-driving cars like the ones Tesla manufactures. What’s not covered here is where this technology might go. For example, should healthier people pay less for health insurance? How can we objectively measure such things? There will be a lot of evolution in this space over the coming years as monitoring and AI become more ubiquitous. Read more here.

DeepBrainAI is Creating Fascinating Fakes

Click here, scroll down a bit, and watch the video of A.I. Anchor Kim Ju-Ha. Do you believe it? Would you watch the news this way? DeepBrainAI has several products it’s using in South Korea to automate tasks typically performed by humans, such as reporting the news, announcing store regulations at 7-Eleven, greeting visitors at a company kiosk, or even giving a presidential speech. These are truly incredible fakes, well worth a look!